What If You Started Talking to AI Instead of Typing?

How voice-first AI use made me go from frustrated user to true collaborator!

🧠 TL;DR

Typing to AI captures limited context in prompts, leading to sub-par responses. Voice interaction allows sharing complete thought processes while moving freely, and research shows speaking is 3x faster than typing. Voice transforms AI interactions from feeling like programming a machine to collaborating with an intelligent partner that understands full context. Through this approach I have been able to understand complex topics much faster compared to traditional text-based methods.

I cannot sit at a desk and brainstorm; I need to pace around the room, think out loud, and let my thoughts flow naturally. But here's the thing: text-based AI interactions were killing my productivity. I had to either sit at my desk or stare at a 6.7-inch screen.

Often, I type a quick prompt that captures only about 50% of my thoughts in summary form and press Enter. Claude receives only about 50% of the context and returns output that is roughly 50% relevant. Can't really blame the AI here…

Then one day over dinner, a friend casually mentioned that he doesn’t use text to interact with AI anymore and only uses voice. That got me thinking. I was paying for Claude Pro but had never touched the voice feature. So I went home and tried it.

That one switch changed how I learn and work.

🤝 Programming vs. Collaborating

Here's what I realized: When you type to AI, your brain switches into "instruction mode." When you speak to AI, you enter "collaboration mode."

Voice is natural. Typing feels like work, and your brain knows that. When you speak to AI, you're having a conversation. When you type, you're programming a machine.

What immediately struck me was that I could articulate my entire thought process, ask more complex questions, and give more context about how I think. As a result, the AI can address my query from my perspective and in the way I framed it.

Additionally, I want the neurological benefits of walking and of being present in an environment that gives me a clear train of thought while I interact with AI, instead of staring at my phone.

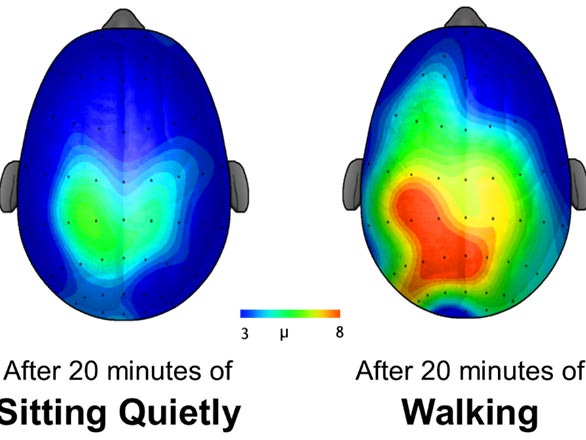

Research from the University of Nebraska shows that just 20 minutes of walking can have a measurable impact on neural activity, likely because of increased oxygen intake. This improves cognitive function and helps you learn better. In the image above, the red areas indicate heightened brain activity.

🧠 The Psychology + Numbers 📊

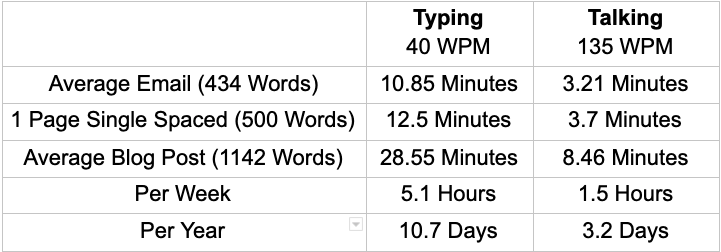

It's not only movement and clarity of thought but also input speed that matters. We speak about 135 words per minute but typically type only 40–45 words per minute. That's roughly 3x more context in the same timeframe.

Speaking also unlocks tone, emphasis, and filler words like "um" and "actually" that help you build your train of thought and think out loud. Text strips all that away into one instruction blob.

🎙️ The Voice-First Test: Before vs. After

Want to see the difference? Here's the exact same scenario, handled two ways:

Typed Prompt:

Teach me all that I need to know about vector databases. I have a background in SQL and NoSQL databases, how are vector databases different from SQL/NoSQL? What are the main use cases for vector databases and why are they used for AI systems? Give me a short and brief answer.

Voice Prompt:

Hey, I’ve been hearing a lot about vector databases. Could you walk me through the concept and what it means? I have some foundational knowledge of SQL and NoSQL, so you can leverage that in your explanation. Also, please explain why vector databases are different from standard database models and why standard database models aren’t ideal for AI applications. Tell me about the key use cases for vector databases too. How do vector databases fit well for AI applications? Can you use examples and also take me through the core concepts and terms I need to know to understand vector databases better? Make your answer short and sweet.

The voice version gives Claude context about my existing knowledge, experience, and genuine curiosity. The typed version is just a list of questions. I would probably say that both inputs took me around the same amount of time. Not sure if I need to practice my touch typing 😬.

This is what I got as a response in both cases:

Typed Prompt:

Vector databases are specialized databases designed to store, index, and search **vector embeddings** (high-dimensional numerical arrays that represent data like text, images, or audio in mathematical space).

## Key Differences from SQL/NoSQL:

**Traditional databases** store structured data (rows/columns) or documents and search using exact matches or range queries.

**Vector databases** store embeddings and use **similarity search** - finding vectors that are "close" to each other in high-dimensional space using distance metrics (cosine similarity, Euclidean distance).

## Main Use Cases:

- **Semantic search** - Find documents by meaning, not just keywords

- **Recommendation systems** - "Find similar products/content"

- **Image/video search** - Search by visual similarity

- **Retrieval-Augmented Generation (RAG)** - Help LLMs access relevant context

- **Anomaly detection** - Identify outliers in high-dimensional data

## Why Essential for AI Systems:

1. **LLMs work with embeddings** - AI models convert text/images into vectors to understand meaning

2. **Semantic understanding** - Unlike keyword search, vectors capture context and meaning

3. **Real-time similarity** - Enable "find similar" queries at scale

4. **RAG applications** - Let AI models retrieve relevant information from large knowledge bases

**Bottom line**: Vector databases bridge the gap between how AI models understand data (as vectors) and how we need to store/search that data efficiently. They're crucial infrastructure for modern AI applications that need to find semantically similar content.

Voice Prompt:

Vector databases are designed to store and search **vector embeddings** - numerical representations of data (text, images, audio) as arrays of floating-point numbers. Think of embeddings as coordinates in high-dimensional space where similar items cluster together.

## Why Traditional Databases Fall Short for AI

**SQL/NoSQL limitations:**

- Designed for exact matches and structured queries

- Can't measure semantic similarity (e.g., "dog" vs "puppy" appear completely different)

- No built-in support for high-dimensional data operations

- Inefficient for finding "nearest neighbors" in vector space

**Vector databases solve this by:**

- Storing embeddings natively

- Using specialized indexes (like HNSW, IVF) for fast similarity search

- Supporting semantic queries instead of just keyword matching

## Key Concepts

**Embeddings:** Dense numerical vectors representing data meaning (e.g., [0.2, -0.8, 0.5, ...])

**Similarity Search:** Finding vectors closest to a query vector using metrics like cosine similarity or Euclidean distance

**Vector Index:** Data structures optimized for fast approximate nearest neighbor search

## Core Use Cases

1. **Semantic Search:** "Find documents about climate change" matches content about "global warming" or "carbon emissions"

2. **RAG (Retrieval-Augmented Generation):** ChatGPT-style apps that search your documents to provide contextual answers

3. **Recommendation Systems:** "Users who liked X also liked Y" based on embedding similarity

4. **Image/Audio Search:** Find similar images or songs by content, not just metadata

## Example in Action

Instead of SQL's `WHERE title LIKE '%dog%'`, vector databases let you search for "pet animals" and automatically return results about dogs, cats, hamsters - anything semantically related, even without exact keyword matches.

Popular vector databases include Pinecone, Weaviate, and Qdrant.

As you can see, the voice response took a more personal approach, leveraging my prior experience and understanding of databases to draw parallels. It also gave me an example of implementing a query in SQL vs. vector databases to help visualize the differences. The text prompt, on the other hand, did answer my question, but was less personalized and more robotic. The voice prompt encouraged further conversation and collaboration.
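To make the `LIKE '%dog%'` versus "pet animals" contrast from the voice response a bit more concrete, here is a minimal Python sketch of the idea. Everything in it is an assumption for illustration: the three document titles, the hand-picked 3-dimensional "embeddings", and the helper names `keyword_search` and `semantic_search` are all hypothetical. Real embeddings come from a model and have hundreds of dimensions, and a real vector database would use an approximate index rather than comparing against every document.

```python
import math

# Hypothetical toy "embeddings", hand-picked for illustration only.
# Rough meaning of the axes here: [pet-related, how-to, finance].
DOCS = {
    "Training tips for your new puppy": [0.9, 0.1, 0.0],
    "How to care for a hamster": [0.7, 0.3, 0.2],
    "Quarterly earnings report": [0.0, 0.1, 0.9],
}

# Hypothetical embedding for the query "pet animals".
PET_QUERY = [0.85, 0.15, 0.05]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def keyword_search(term):
    """Rough stand-in for SQL's LIKE '%term%': case-insensitive substring match."""
    return [title for title in DOCS if term.lower() in title.lower()]


def semantic_search(query_vector, top_k=2):
    """Brute-force similarity search: rank every document by cosine similarity."""
    ranked = sorted(
        DOCS,
        key=lambda title: cosine_similarity(query_vector, DOCS[title]),
        reverse=True,
    )
    return ranked[:top_k]


print(keyword_search("dog"))       # [] because no title contains the word "dog"
print(semantic_search(PET_QUERY))  # the puppy and hamster titles, in that order
```

The keyword search misses the puppy article entirely because the literal string "dog" never appears, while the similarity search surfaces both pet articles and leaves the earnings report out. That is the gap the response was describing.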

🗣️ What Actually Makes Voice Different

Through trial and error, I discovered what really works. It's not about following some rigid framework; it's about embracing how natural conversation actually flows.

When I started talking to Claude about vector databases while pacing around my room, I could think about what Claude was trying to tell me and then come back with more insightful questions. All of this happens naturally because voice removes the filter between your brain and AI.

Here's an example of how I actually start conversations with Claude now:

Hey, I want you to act as a really smart friend who is a solution architect working at a big tech firm. I want you to treat this as a casual cup of coffee at Starbucks where we are just having a conversation about system design, systems thinking, and the goal is to help me get a foundational grasp of solution architecture if I were to build any application, for example. Let's keep the responses conversational, not formal. If I'm confused, I may interrupt you and ask a few questions. I want you to guide the flow of the conversation to cover key concepts as we progress. Make sure you ask me follow-up questions to ensure that I've understood key concepts along the way.

🔍 What to Keep in Mind

Before diving into voice AI interactions, keep this in mind:

Privacy Matters: Voice recordings may be stored in the cloud. For sensitive work, check data retention policies.

Environment Quality: Background noise and poor audio can impact performance. Ideally, you'd be in a quiet environment with a decent microphone.

Intent: Voice AI might misread sarcasm or urgency. When brainstorming, say things like "just thinking out loud." For urgent tasks, say something like "this is time-sensitive, so give me a brief answer."

🚀 From Frustrated User to AI Power User

It's pretty much like talking to a really smart friend who is available 24/7 about any given topic. You can ask the most absurd or edge case questions without any judgment.

Since switching to voice interactions, my understanding of complex topics has become much more refined. I've gotten a good foundational understanding of vector databases, a topic I knew almost nothing about a few weeks ago.

But honestly the best part is that you're not married to your desk. You can have a casual conversation during your daily walk. You burn calories and learn something new, two birds with one stone.

🧑💻➡️🧑🤝🧑 Going from User to Collaborator

Voice interaction fundamentally changes how we interact with AI. When you type to AI, you're basically providing instructions with limited context, which limits how well the output aligns with your actual thought process. Speaking clears up this context misalignment and lets you work as a collaborator with AI towards a shared goal.

The interaction feels more natural and conversational. Since I'm able to ask all the edge case questions I can think of, I actually get a much better understanding of foundational concepts.

Try this experiment: open your favorite AI application and identify a topic you want to learn or a problem you need to solve. Instead of typing, activate the voice input and begin speaking. Explain the context, your confusion, what you've already tried, and what success looks like, just following the natural flow of your thoughts.

💬 What personal use case do you think would benefit from voice input? Share your insights, and let’s continue the discussion.


I’m a big fan of the voice modes too. I started using it initially while driving and wanting to talk thru something or to keep chatting through a project and it was great.

The experience of chatting through problems with AI helped me realise I actually like talking thru ideas when I’m not at my computer. Somehow it just feels like I can think clearer and more creatively. And now I’m finding sometimes I don’t even want the AI to engage, I just talk out loud to myself using an AI note taker and it helps me figure stuff out, which I realise sounds a bit bonkers, but I swear it helps.

Really thought-provoking piece — it invites us to imagine AI not just as a tool, but as a conversational companion. Technology like Spatial Hearing + Cognition AI is precisely what will turn that into a natural, intuitive experience.